So we are back. In my previous article I shared some improvements to my voice assistant connected to a local LLM running on my GPU.

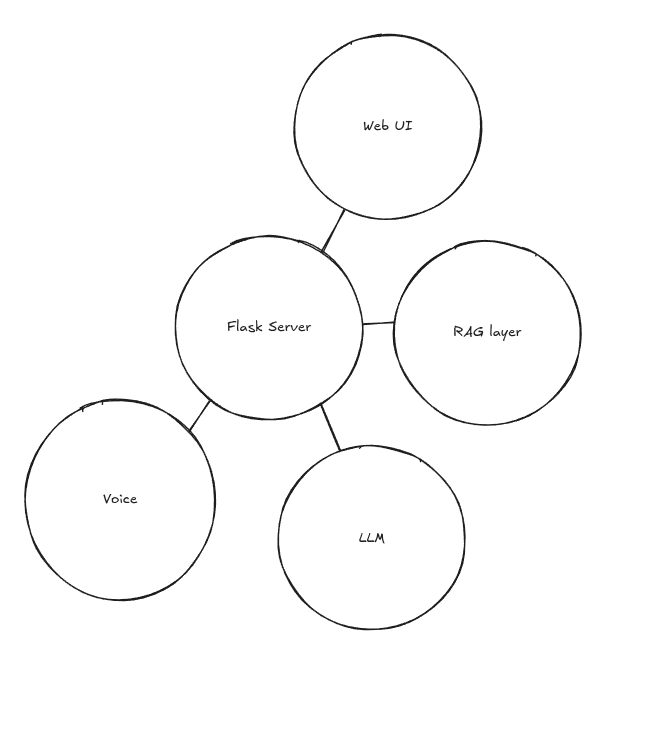

I implemented metrics to track how quickly the supporting processes were running, added a RAG layer to improve prompting the LLM with custom data, and created a Flask server connected to a basic web UI for audio recording.

At the end of that session, I got a bit ambitious with Codex to see how well it would handle a larger implementation like the server and UI. The quality of its output matched the level of depth and specificity I provided—no more, no less.

Tonight, I’m considering redirecting the project back to a command-line tool to keep things simple and focus on the AI functionality. Looking back, it’s clear I had fun building during the last session, but I veered off course in the process.

The goal of this project is to learn more about working with large language models. I had a lot of fun moving fast with Codex, but rapid development without a clear-cut plan often leads to impulsive decisions.

After a few adjustments, we’re back to a command-line tool. Additionally, I’m putting the voice processing on hold to focus on what I can do with the large language model.

I want this assistant to become a domain expert of sorts. That means I’ll need to gather information and train it. Looking more closely at the AI-generated code, I’m not even fully certain the RAG prompt and retrieval are working. So I’m digging in and coding it myself.

- Checking to make sure the different components work

- The RAG prompt returned from the builder contains only the prompt text, not the retrieved data

- Vector DB file exists from previous training

- Found something: I need a custom mode flag to inject the RAG context into the prompt

- Adjusted my command to include the flag

- RAG is now working and retrieving documents

- Removed voice functionality

- Tested the model and RAG combo after adding an informational document about my town to the vector DB

- Great—the model is now working with the injected prompt and providing relevant responses
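A minimal sketch of the idea behind that fix, not the project's actual code: the prompt builder only adds retrieved context when a flag is set, so leaving the flag off produces a prompt with "just the text". Everything here is an assumption made for illustration: the `--rag` flag name, the `build_prompt` and `retrieve` helpers, the placeholder documents, and the toy word-count similarity that stands in for a real embedding model and vector DB.

```python
import argparse
import math
import re
from collections import Counter

# Placeholder documents standing in for the vector DB contents (made up).
DOCS = [
    "Example Town holds a farmers market every Saturday.",
    "Bananas are rich in potassium.",
]


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a real setup would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_prompt(question: str, docs: list[str], use_rag: bool) -> str:
    """Build the LLM prompt; retrieved context is injected only if use_rag is set."""
    if not use_rag:
        return question  # Without the flag, the prompt is just the text.
    context = "\n".join(retrieve(question, docs))
    return (
        "Use the context below to answer.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


def parse_args(argv=None) -> argparse.Namespace:
    """Parse the question and the hypothetical --rag mode flag."""
    parser = argparse.ArgumentParser(description="CLI prompt builder sketch")
    parser.add_argument("question")
    parser.add_argument("--rag", action="store_true",
                        help="inject retrieved documents into the prompt")
    return parser.parse_args(argv)


def main(argv=None) -> None:
    args = parse_args(argv)
    print(build_prompt(args.question, DOCS, args.rag))


# Demo call with explicit arguments; pass argv=None to read the real command line.
main(["When is the farmers market?", "--rag"])
```

Printing the final prompt before it goes to the model is a cheap way to confirm whether the retrieved document actually made it in, which is the check that exposed the missing flag.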

Now that I’ve had a minute to think about the direction of the project, I want to figure out the best way to put this functionality online. I’m excited to take that next step. Looks like the voice assistant is no longer a voice assistant.

I still think there’s value in making an LLM adapt to become a domain expert on specific data.